Hello @Ford,

Thank you for confirming this is a valuable feature request and for forwarding it to the team. I’m happy to provide more context on why this functionality is important to my workflow.

Why this feature is important to me (the ‘why’):

Currently, I’m using Roboflow for a project involving food item recognition. Besides identifying the brand of each item (which I’m doing via annotation classes), I also need to categorize each image by its flavor profile (e.g., ‘chocolate’, ‘vanilla’, ‘spicy’, ‘original’). The image-level tagging feature in Roboflow is perfectly suited for this, because a single image can carry multiple flavor tags, and those tags describe the image as a whole rather than a specific object bounding box.

The challenge arises when I need to analyze my dataset. I require a comprehensive CSV output that includes:

- The filename

- The brand name(s) (from object detection classes)

- The flavor tag(s) (from image-level tags)

Without a direct way to export image-level tags, I’m left with a critical gap in my dataset analysis.

What exactly I’m trying to accomplish (the ‘what’):

I’m trying to create a unified dataset manifest. This single CSV file would serve as my master record for all images, allowing me to quickly see:

- Which brands are present in an image.

- Which flavors are associated with that image.

This combined information is essential for downstream tasks such as:

- Data exploration and quality control: Easily auditing my dataset to ensure all relevant information (brand and flavor) is captured for each image.

- External analysis: Performing analytics on the combined brand and flavor distribution across my entire dataset using tools outside of Roboflow.

- Reporting: Generating comprehensive reports that include both object-level classifications and image-level attributes.

How it would improve my experience with Roboflow (the ‘how it helps’):

- Streamlined Workflow: Currently, I have to manually manage this data or develop custom scripts to combine multiple exports. Having a direct export for image-level tags would eliminate this time-consuming, error-prone manual step.

- Data Integrity: A direct export ensures that the tags I apply in Roboflow are readily accessible for my analysis without needing complex data merging, reducing the risk of discrepancies.

- Full Utilization of Roboflow Features: Knowing that tags are easy to extract for analysis would let me rely on the tagging feature for dataset organization and metadata, which makes it far more powerful for data management.

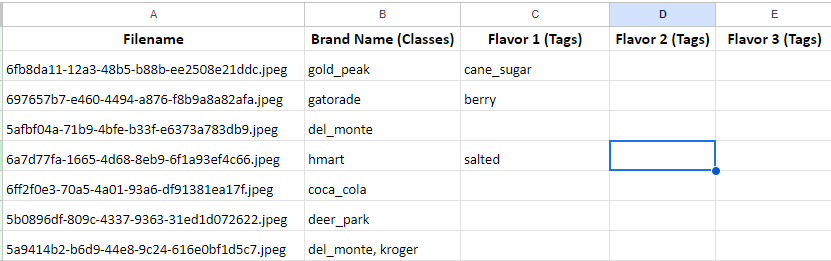

Screenshot:

The solution I was looking for:

While I await potential future developments from Roboflow, I wanted to let you know that I have developed a custom Streamlit application to address this exact problem for my current needs.

This app allows me to generate the combined CSV output I require by leveraging Roboflow’s existing export formats.

App Link: https://brand-flavor-dataextractor-roboflow.streamlit.app

Here’s a brief explanation of how my app works:

- Input: My Streamlit app takes the COCO JSON export directly from my Roboflow Object Detection project. This JSON file contains all the necessary annotation data (filenames, bounding boxes, and class names for my brands).

- Processing:

  - The app parses this COCO JSON to extract the filename and associated class name(s) (my brand names) for each image.

  - Crucially, the app expects the image-level tags (flavors) to be present within the image objects in the COCO JSON metadata. While tags are not part of the standard COCO format, Roboflow often embeds additional image metadata (like source file names, tags, etc.) in the images section of the COCO JSON. My app specifically looks for these tag-related fields.

  - It then combines this extracted information, mapping each image to its detected brand classes and its assigned flavor tags.

- Output: The app generates a clean CSV file in my desired format: `FileName | Brand Name(s) | Flavor Tag(s)`.
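For anyone who wants to reproduce the processing step outside the app, here is a minimal Python sketch of the same idea, using only the standard library and assuming the usual COCO `images` / `annotations` / `categories` layout. It is not the code behind the app linked above. The `TAG_KEY` value `"tags"` is a hypothetical placeholder for wherever your export actually stores image-level tags, and the sketch assumes that field holds a list of strings, so check your own JSON and adjust it.

```python
import csv
import io
from collections import defaultdict

# HYPOTHETICAL: key under which image-level tags appear in each entry of
# the COCO "images" list. Check your own export and change this if needed.
TAG_KEY = "tags"

COLUMNS = ["FileName", "Brand Name(s)", "Flavor Tag(s)"]


def build_manifest(coco: dict, tag_key: str = TAG_KEY) -> list[dict]:
    """Map each image to its brand classes and its image-level tags."""
    # Category id -> class name (the brand names in this project).
    categories = {c["id"]: c["name"] for c in coco.get("categories", [])}

    # Image id -> set of brand names found in that image's annotations.
    # A set removes duplicates when one brand has several boxes.
    brands_by_image = defaultdict(set)
    for ann in coco.get("annotations", []):
        name = categories.get(ann["category_id"])
        if name is not None:
            brands_by_image[ann["image_id"]].add(name)

    rows = []
    for img in coco.get("images", []):
        # Images without the (assumed) tag field get an empty tag list.
        tags = img.get(tag_key) or []
        rows.append({
            "FileName": img["file_name"],
            "Brand Name(s)": ", ".join(sorted(brands_by_image[img["id"]])),
            "Flavor Tag(s)": ", ".join(sorted(tags)),
        })
    return rows


def manifest_to_csv(rows: list[dict]) -> str:
    """Serialize manifest rows to CSV text with a fixed column order."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

To use it, load the export with `json.load`, pass the resulting dict to `build_manifest`, and write the string returned by `manifest_to_csv` to a `.csv` file. Multiple brands or flavors for one image are joined with ", " inside a single cell; `csv.DictWriter` quotes those cells automatically, so the output remains valid CSV.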

This custom solution helps me bridge the current gap, allowing me to fully utilize both my object detection annotations and my image-level tags for my analysis and reporting.

I hope this provides further insight into the importance and utility of this feature.

Thank you for your continued support!