Thanks, @Ford. I definitely get that blocks should be as atomic as possible; general good software engineering should follow the “do one thing” paradigm. I was asking this question because I was wondering if there were tools or techniques associated with Roboflow for doing what I was asking, and maybe the answer, based on what you said, is “no”. Let me be a little more explicit about the issue we faced.

I assume that Roboflow is meant for people who don’t want to get hung up on writing their own workflow infrastructure logic, which means a lot of the customers might be people who are not particularly adept at Python programming. This certainly describes my son. So they’re going to make a lot of coding errors, even in simple blocks that are less than 40 lines long.

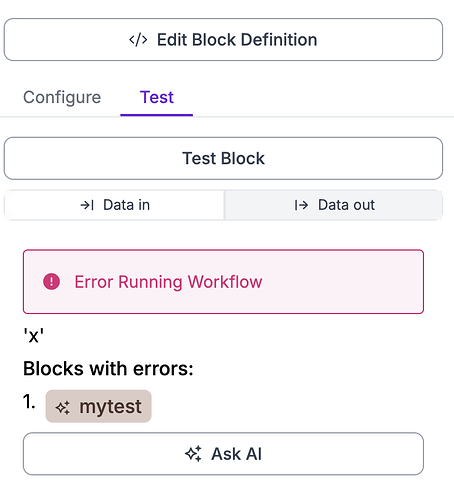

Here’s an example of the problem he faced. He was trying to get some basic info out of the Detections object, but because he was unfamiliar with the data structure, he didn’t know the precise command, so he was trying to grab an ‘x’ parameter out of the data element:

x1 = pred.data['x']

This produced the error message:

Since the block referenced x in several other sections, without line numbers the only way for him to track down what was wrong was to comment out lines, then progressively uncomment them until he figured out what was causing the problem.

The immediate solution to this would be to just get better error reporting in the UI (unless this already exists, and you can help me out in showing how to make this happen).
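As a possible stopgap until the UI reports line numbers, the block could catch its own exceptions and return the failing line as an output. This is only a sketch using Python's standard `traceback` module: `FakePrediction`, `describe_error`, and the simplified `run(pred)` signature are hypothetical stand-ins, not Roboflow's API, and I haven't confirmed how the Roboflow runtime treats exceptions or source lines.

```python
import traceback


def describe_error(exc: BaseException) -> str:
    """Summarize an exception with the line number of the innermost frame."""
    frame = traceback.extract_tb(exc.__traceback__)[-1]
    # frame.line may be empty if the source text is not available at runtime.
    return f"{type(exc).__name__}: {exc} (line {frame.lineno}: {frame.line})"


class FakePrediction:
    """Hypothetical stand-in for a prediction; the real object will differ."""

    def __init__(self):
        self.data = {"class_name": "car"}  # deliberately has no 'x' key


def run(pred):
    """Simplified block body (assumed signature) that reports its own errors."""
    try:
        x1 = pred.data["x"]  # same failing lookup as in the example above
        return {"x1": x1, "error": ""}
    except Exception as exc:
        return {"x1": None, "error": describe_error(exc)}


result = run(FakePrediction())
print(result["error"])
```

The `error` output then names the exception type, the missing key, and the line number inside the block, which would have avoided the comment-and-uncomment search.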

But it also points to a larger issue in terms of integrating block code we want to maintain outside the context of the Roboflow app. Ideally, we would want all our custom Python blocks to be checked into a code repository, along with a unit test for each block. But we need a way to run the block outside of Roboflow for this to work.

My dream feature: In addition to having the “Test Block” button in the UI, have an “export test harness” button that would create a Python script that could invoke the block in the normal Python interpreter (along with the data context that is created when you run the test in the UI, such as the specific input media and outputs of any upstream blocks). This script wouldn’t run the upstream modules; it would just set up the data environment (hardcoded into the script) from the output of the test that you just ran. It wouldn’t even need visibility to Roboflow libraries (other than definitions of data structures).
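To make the idea concrete, here is roughly what I imagine an exported harness could look like. Everything in it is hypothetical: the `CAPTURED_INPUTS` snapshot, its field names and values, and the `run(predictions)` signature are made up for illustration, and no such export exists in Roboflow as far as I know.

```python
import json

# Hypothetical snapshot of the upstream outputs from one UI test run,
# hardcoded into the script at export time (values invented for illustration).
CAPTURED_INPUTS = json.loads("""
{
  "predictions": [
    {"x": 120.0, "y": 80.0, "width": 40.0, "height": 30.0, "class": "car"}
  ]
}
""")


def run(predictions):
    """The block body under test (assumed signature): collect box centers."""
    centers = [(p["x"], p["y"]) for p in predictions]
    return {"centers": centers}


def main():
    # Call the block with the captured context; no upstream blocks are run.
    outputs = run(**CAPTURED_INPUTS)
    print(json.dumps(outputs, indent=2))
    return outputs


if __name__ == "__main__":
    main()
```

Because this is a plain script, any error raised inside `run` would show up as an ordinary Python traceback with file and line number.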

This exported test harness could be checked into the repository where you work on the Python block and used in CI pipelines, so Python blocks could be tested for validity prior to integration into a workflow. It would also let you code your block in something like PyCharm, with all the useful Python IDE features, including command completion and data inspection, to more easily work with the context data in the workflow.
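On the CI side, a per-block unit test could be as small as the sketch below, using the standard `unittest` module. The block body and its `run(predictions)` signature are again hypothetical; a real test would import the block's code from the repository rather than define it inline.

```python
import unittest


def run(predictions):
    """Hypothetical block body: count detections per class."""
    counts = {}
    for p in predictions:
        counts[p["class"]] = counts.get(p["class"], 0) + 1
    return {"counts": counts}


class RunBlockTest(unittest.TestCase):
    def test_counts_by_class(self):
        preds = [{"class": "car"}, {"class": "car"}, {"class": "bus"}]
        self.assertEqual(run(preds), {"counts": {"car": 2, "bus": 1}})

    def test_empty_input(self):
        # An empty prediction list should yield an empty count table.
        self.assertEqual(run([]), {"counts": {}})


if __name__ == "__main__":
    unittest.main()
```

A CI job could then run `python -m unittest` on each commit and flag a broken block before it is pasted into a workflow.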