Hello Roboflow community,

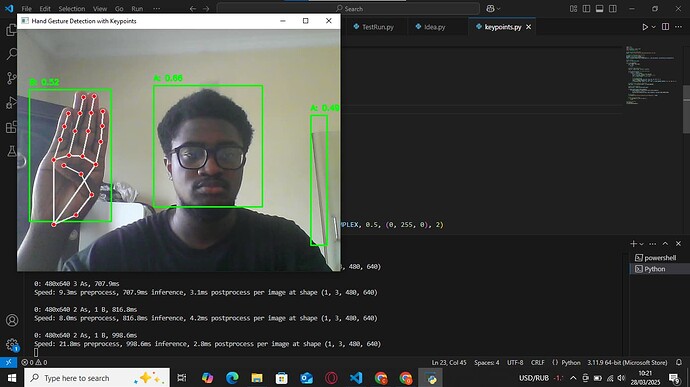

My name is Philip, and I’m working on a YOLO-based hand gesture recognition model for sign language. The problem is that the trained model sometimes detects my face or random background objects as a hand gesture. I annotated my hand gesture dataset on Roboflow (only two classes, A and B, because I wanted to see how it works first), using 100 images per class, and trained the model on Google Colab.
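To double-check what the model actually trains on, a small script can tally the labels. This is a minimal sketch that assumes the dataset is exported in YOLO format: one `.txt` file per image in a labels folder (assumed to be something like `train/labels`), with one `class_id x_center y_center width height` line per box. `summarize_yolo_labels` is a hypothetical helper, not part of Roboflow or Ultralytics. In YOLO format, an image whose label file is empty has no objects and serves as a background example. This script counts those files but does not see images that have no label file at all.

```python
from collections import Counter
from pathlib import Path


def summarize_yolo_labels(label_dir: str) -> tuple[Counter, int, int]:
    """Count box instances per class ID in a YOLO-format labels folder.

    Assumes one .txt file per image, each non-empty line being
    "class_id x_center y_center width height" (normalized coordinates).
    Returns (instances per class, number of label files, number of empty files).
    Images with no .txt file at all are not seen by this function.
    """
    per_class: Counter = Counter()
    n_files = 0
    n_empty = 0
    for txt in sorted(Path(label_dir).glob("*.txt")):
        n_files += 1
        # Split each non-blank line into its fields.
        rows = [ln.split() for ln in txt.read_text().splitlines() if ln.strip()]
        if not rows:
            n_empty += 1  # no boxes: a background (negative) image
        for fields in rows:
            per_class[int(fields[0])] += 1  # first field is the class ID
    return per_class, n_files, n_empty
```

For example, `summarize_yolo_labels("train/labels")` would return the per-class box counts together with how many label files exist and how many of them are empty.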

Here is what I’ve tried so far:

- Adjusted the confidence and IoU thresholds, but the issue persists.
- Increased the number of training epochs to 100.
- Annotated with both bounding boxes and hand landmarks.
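To see why threshold tuning alone may not remove these detections, here is a simplified sketch of what the two thresholds do: drop boxes below a confidence cutoff, then run greedy per-class non-maximum suppression (NMS) using IoU. This is an illustration under stated assumptions (boxes as `(x1, y1, x2, y2)` tuples, illustrative default values) and is not Ultralytics' actual implementation. The key point is that IoU-based NMS only removes boxes that overlap a higher-scoring box of the same class, so an isolated false positive (such as a face) is affected only by the confidence threshold.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, conf_thres=0.25, iou_thres=0.7):
    """Confidence filtering followed by greedy per-class NMS.

    dets: list of (box, score, class_id). Returns kept detections,
    highest score first. Threshold defaults are illustrative only.
    """
    # 1) Drop every detection below the confidence threshold, best first.
    candidates = sorted(
        (d for d in dets if d[1] >= conf_thres), key=lambda d: d[1], reverse=True
    )
    kept = []
    for box, score, cls in candidates:
        # 2) Suppress a box only if it overlaps an already-kept box of the same class.
        if all(c != cls or iou(box, b) <= iou_thres for b, _, c in kept):
            kept.append((box, score, cls))
    return kept


# Hypothetical detections for one frame.
dets = [
    ((100, 100, 200, 220), 0.91, 0),  # real hand, class A
    ((105, 102, 198, 225), 0.60, 0),  # duplicate box on the same hand
    ((300, 50, 380, 150), 0.82, 1),   # face misdetected as class B
]
print(filter_detections(dets, conf_thres=0.5, iou_thres=0.5))
```

With these made-up numbers, NMS removes the duplicate hand box, but the face survives because it overlaps nothing. Raising `conf_thres` above 0.82 would drop the face, and it would also drop any real hand the model scores below that value.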

Project Type: Object Detection

Model Used: YOLOv8

Operating System & Browser: Windows 10 and Chrome

Project Universe Link or Workspace/Project ID: Serious Object Detection Dataset by Example

The image above shows an example of what I mean.

I’d really appreciate any advice on resolving this issue. Thanks in advance!